Homegrown Terrorism and the Small N Problem

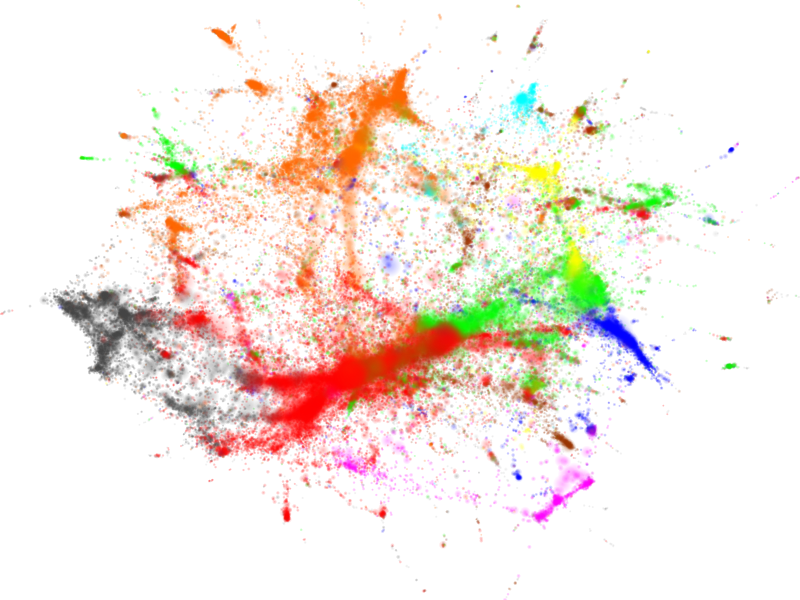

I just finished the new RAND report on homegrown terrorism in the United States, entitled "Would-Be Warriors: Incidents of Jihadist Terrorist Radicalization in the United States Since September 11, 2001," and it is a fascinating analysis of the paths to radicalization by American citizens over the past near-decade. This paper is clearly extremely timely given the seemingly sudden rise in domestic radicalization toward jihadism. As the report notes, "the 13 cases in 2009 did indicate a marked increase in radicalization leading to criminal activity, up from an average of about four cases a year from 2002 to 2008." Given this fact, and the more recent Faisal Shahzad case, and the overall increase in attacks against the U.S., the salience of homegrown terrorism is as high as ever.

Previously, I have written skeptically about the notion that domestic terrorism is, in fact, on the rise. This apparent trend may be better described as a regression toward the mean level of this activity over a longer time period. To his credit, the author, Brian Michael Jenkins, assuages any alarmist notions of a sudden and abnormal rise in domestic terrorism by reviewing the extensive history of domestic terrorism incidents that occurred in the United States during the 1960s and 1970s.

After reading the RAND report I was not necessarily dissuaded from my position that the current spike is nothing more than a regression to the mean; however, I was convinced by this report that the stakes have changed considerably since the previous decades, and thus this subject deserves considerable attention going forward. What the RAND report suffers from, like many other reports on domestic terrorism, is a small N problem, and to study this phenomenon more accurately, efforts must be made to overcome it.

To be clear, having a small number of observations with respect to domestic terrorism and radicalization is a "good" problem. National security benefits from the fact that these are rare events, and we are thankful that this is the case. That said, because the RAND analysis consists of only 46 observations over an eight-year period, any conclusions must be tempered by this fact. For example, when describing who the terrorists are, the author states (emphasis mine):

Information on national origin or ethnicity is available for 109 of the identified homegrown terrorists. The Arab and South Asian immigrant communities are statistically overrepresented in this small sample, but the number of recruits is still tiny. There are more than 3 million Muslims in the United States, and few more than 100 have joined jihad—about one out of every 30,000—suggesting an American Muslim population that remains hostile to jihadist ideology and its exhortations to violence.

We know, however, that this final assertion does not follow from the numbers. The numbers, at best, only support the claim that domestic radicalization is very rarely observed. They do not suggest anything about the internal disposition of American Muslims. While this may actually be the case, simply not observing a phenomenon cannot support this claim. The cliché, "The absence of evidence is not evidence of absence," is particularly applicable to small N problems. If we are actually interested in understanding the sentiment of American Muslims, then traditional survey work would be quite applicable.
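To make the arithmetic in the quoted passage concrete, here is a minimal sketch that reproduces the "one out of every 30,000" figure and puts a rough interval around it. The round counts (100 cases, 3,000,000 people) are my simplification of the report's "few more than 100" and "more than 3 million," and treating each person as an independent binomial trial is an assumption made purely for illustration; the Wilson score interval is my choice of method, not anything used in the report.

```python
import math

def wilson_interval(successes, n, z=1.96):
    """Wilson score interval for a binomial proportion (z=1.96 is roughly 95%)."""
    p = successes / n
    denom = 1 + z**2 / n
    center = (p + z**2 / (2 * n)) / denom
    half_width = z * math.sqrt(p * (1 - p) / n + z**2 / (4 * n**2)) / denom
    return center - half_width, center + half_width

# Round numbers taken from the quoted passage (assumed, not exact counts).
observed = 100
population = 3_000_000

rate = observed / population
low, high = wilson_interval(observed, population)

print(f"observed rate: about 1 in {1 / rate:,.0f}")
print(f"rough 95% interval: 1 in {1 / high:,.0f} to 1 in {1 / low:,.0f}")
```

Whatever the interval turns out to be, it only bounds how often the event is observed. It carries no information about the attitudes of the people in the denominator, which is why survey data would be needed for that question.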

Clearly, the primary problem is that because these are rare events we simply do not have enough data to build good statistical models. As such, whenever endeavoring to study this subject, every attempt should be made to retain as much applicable data as possible. In the case of the RAND report this was not done, as the data were thinned to include only those cases that resulted in indictments in the U.S. or abroad. While this is a minimal limitation, the underlying assumption is that the paths to and intents behind radicalization are somehow different for those who are indicted versus those who are not. This seems dubious at best, and therefore a better approach would be to include all possible observations and then use a more theoretically unbiased method for data cleansing (such as coarsened exact matching) to isolate those observations of interest. The report's approach seems to follow a troubling trend in terrorism studies of selection on the dependent variable.
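For readers unfamiliar with selection on the dependent variable, the following toy simulation may help. Everything in it is synthetic and hypothetical: the population size, the trait rate, and the outcome rate are made-up parameters, and the trait is constructed to be unrelated to the outcome. It is only a sketch of the general problem, not a model of the RAND data.

```python
import random

def simulate(n=100_000, trait_rate=0.3, outcome_rate=0.01, seed=0):
    """Build a synthetic population of (has_trait, has_outcome) pairs.

    The outcome is drawn independently of the trait, so by construction
    the trait has no relationship to the outcome.
    """
    rng = random.Random(seed)
    people = []
    for _ in range(n):
        has_trait = rng.random() < trait_rate
        has_outcome = rng.random() < outcome_rate  # independent of the trait
        people.append((has_trait, has_outcome))
    return people

def trait_share(rows):
    """Fraction of rows in which the trait is present."""
    return sum(has_trait for has_trait, _ in rows) / len(rows)

people = simulate()
cases = [p for p in people if p[1]]          # what we see if we select on the outcome
non_cases = [p for p in people if not p[1]]  # the comparison group that gets discarded

print(f"trait share among cases:     {trait_share(cases):.3f}")
print(f"trait share among non-cases: {trait_share(non_cases):.3f}")
```

Looking at the cases alone, one might be tempted to read the trait share as a characteristic of those who experience the outcome. Only the comparison with the non-cases shows that the trait is about equally common in both groups, and that comparison is impossible once the data are restricted to observations where the outcome occurred.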

