Thoughts on Measuring Social Influence

Over the past few weeks I have had several conversations with people interested in understanding the dynamics of influence in online discourse. Clearly, there is a social network aspect to this: these platforms provide the medium for these exchanges to take place, and in most cases users are only exposed to information that exists on their network (the notable exception being Twitter, though most users still only pull information from those they are following). The primary question is: how does online social activity manifest itself in offline behavior? For example, to what extent do social networking platforms influence voting behavior? How do reviews of recently released movies posted to Twitter affect an individual's likelihood of seeing a given film in the theater? Are online discourses a meaningful path to violent radicalization?

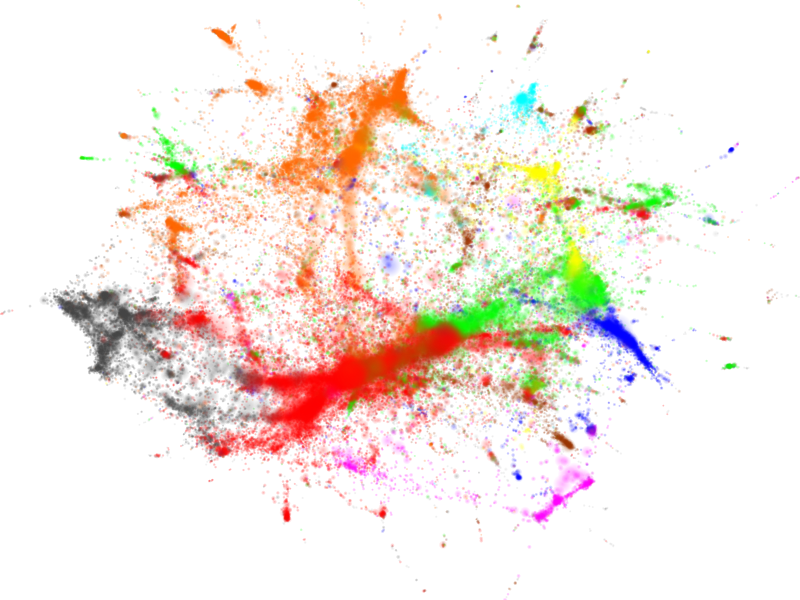

From an analytical perspective, the difficulty is that there are no reliable ways of measuring the process by which this influence occurs. Intuitively, we know that influence is happening online, but this process is largely hidden within the context of online exchanges. As we often represent online social interactions as networks, and because much of the relevant data will have a network form, it may be useful to begin by framing this problem in terms of a graph.

In these terms, there are at least two ways one might approach this problem. First, to measure influence we might attempt to identify influential individuals and subsequently measure their activity. An important assumption here is that people are influenced in some relatively uniform way as a function of receiving information from those they "trust". Over time, and assuming a constant rate of influence, as individuals self-organize around these influencers we could infer each individual's level of influence. A second approach is to attempt to measure signals related to the digestion of information. That is, rather than assume influence comes from key actors, we do the reverse and assume influence comes from pivotal pieces of information. In this case, these signals might come in the form of first-, second-, or higher-order transmission of these key bits of information from their source, or the infusion of some bit of information into a network from multiple sources. As with the influential-actors approach, by observing these signals over time we could approximate changes in preference and thus infer influence.
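To make the second, information-centric approach a bit more concrete, here is a minimal sketch in Python. It assumes, hypothetically, that we can observe which sender-to-receiver edges carried a particular piece of information; `transmission_orders` and `count_exposures` are illustrative names, not part of any existing library. Transmission order is computed with a breadth-first search from the source(s), so a direct recipient is first-order, a recipient of a recipient is second-order, and so on. The number of distinct senders per receiver is a crude proxy for information infused from multiple sources.

```python
from collections import deque


def transmission_orders(edges, sources):
    """Return {node: order}, the fewest hops from any source.

    edges: iterable of (sender, receiver) pairs that carried the item
    (a hypothetical input format). Sources have order 0, their direct
    receivers order 1 (first-order transmission), and so on.
    """
    adjacency = {}
    for sender, receiver in edges:
        adjacency.setdefault(sender, []).append(receiver)

    sources = list(sources)
    orders = {source: 0 for source in sources}
    queue = deque(sources)
    # Breadth-first search: the first time a node is reached is its lowest order.
    while queue:
        node = queue.popleft()
        for neighbor in adjacency.get(node, []):
            if neighbor not in orders:
                orders[neighbor] = orders[node] + 1
                queue.append(neighbor)
    return orders


def count_exposures(edges):
    """Count distinct senders per receiver, a rough signal of multi-source infusion."""
    senders = {}
    for sender, receiver in edges:
        senders.setdefault(receiver, set()).add(sender)
    return {receiver: len(group) for receiver, group in senders.items()}


if __name__ == "__main__":
    # Hypothetical cascade of a single piece of information.
    cascade = [("a", "b"), ("a", "c"), ("b", "d"), ("c", "d"), ("d", "e")]
    print(transmission_orders(cascade, ["a"]))
    print(count_exposures(cascade))
```

In this toy cascade, node `d` is a second-order recipient that received the item from two distinct senders. Tracking how such quantities change across many items over time is one way the signals described above might be operationalized, though the sketch says nothing about whether exposure actually changed anyone's preferences.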

In the context of these competing approaches this problem becomes a philosophical one, and it exemplifies the fundamental difference between node-centric and edge-centric analyses of networks. By assuming individuals drive influence we are taking a node-centric approach, wherein actors have some valuation for information received online and are therefore attracted to those individuals who maximize this utility. The edge-centric approach assumes that content is valued over source, and that the information carried on an edge is the primary engine of influence. It has always been my contention that too much time is spent focused on nodes in network analysis. In fact, the problem of measuring influence in online social networks is an excellent example of the value of edge-centric analysis.

As stated, this is essentially a measurement problem: we need a way to quantify information digestion, but lack an appropriate metric. Consider a social network with some fixed number of nodes. By focusing on the characteristics of the nodes we are inherently limiting our analytical scope. While the most "central" actors may change over time, we can never achieve a meaningful measure of influence by simply examining the structural characteristics of these nodes. Influence can only occur as a function of edges; therefore, they must be the primary unit of analysis in this endeavor. Perhaps this is why I have always been a big fan of the line graph transformation, in which each edge of the original network becomes a node and two such nodes are connected whenever the original edges share an endpoint.
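A minimal sketch of the line graph transformation follows, written in plain Python so it is self-contained; in practice the networkx library provides `networkx.line_graph` for this. It assumes a simple undirected network given as a list of `(u, v)` pairs with mutually comparable labels (e.g. all strings); the function names `line_graph` and `line_degree` are illustrative. Once edges become nodes, ordinary node-level measures can be applied to them. Here, degree in the line graph counts how many other edges each edge touches.

```python
from itertools import combinations


def line_graph(edges):
    """Return (line_nodes, line_edges) for a simple undirected graph.

    Each original edge becomes a line-graph node; two line-graph nodes are
    adjacent when the original edges share an endpoint. Assumes node labels
    can be sorted against each other (e.g. all strings).
    """
    # Normalize so (u, v) and (v, u) refer to the same undirected edge.
    line_nodes = sorted({tuple(sorted(edge)) for edge in edges})

    # Map each original endpoint to the edges incident on it.
    incident = {}
    for edge in line_nodes:
        for endpoint in set(edge):
            incident.setdefault(endpoint, []).append(edge)

    # Every pair of edges sharing an endpoint becomes one line-graph edge.
    line_edges = set()
    for touching in incident.values():
        for first, second in combinations(touching, 2):
            line_edges.add(tuple(sorted((first, second))))
    return line_nodes, sorted(line_edges)


def line_degree(line_nodes, line_edges):
    """Degree of each original edge within the line graph."""
    degree = {node: 0 for node in line_nodes}
    for first, second in line_edges:
        degree[first] += 1
        degree[second] += 1
    return degree


if __name__ == "__main__":
    path = [("a", "b"), ("b", "c"), ("c", "d")]
    nodes, links = line_graph(path)
    print(links)
    print(line_degree(nodes, links))
```

On the path `a-b-c-d`, the middle edge `(b, c)` has line-graph degree 2 while the two end edges have degree 1, so the middle edge is the most connected unit of analysis. Other node measures, such as betweenness, could be applied to the line graph in the same way to score edges rather than actors.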